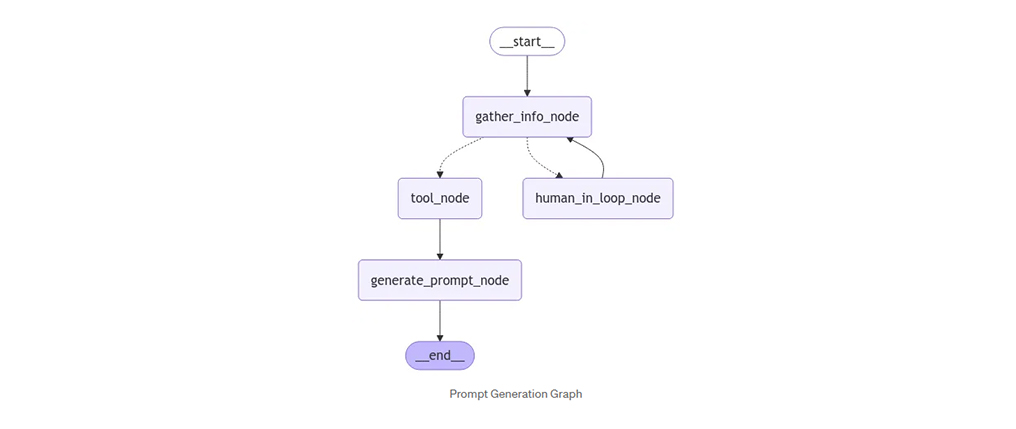

In this tutorial, we implement a prompt generator that collects its requirements from the user. A human-in-the-loop mechanism is built with LangGraph’s interrupt function, which temporarily halts the graph to gather human input; execution is then resumed with the Command class. This example builds on the prompt generator use case from the LangGraph documentation, adding human involvement for more interactivity and control.

Overview

We’ll use LangGraph for workflow management, LangChain for LLM interactions, and a Llama 3 model served by Groq as our language model.

- The system starts by gathering information about the prompt requirements through a series of questions.

- It uses a human-in-the-loop approach, allowing for interactive refinement of requirements.

- Once all necessary information is collected, it generates a prompt template based on the gathered requirements.

- The graph state is checkpointed, so a run that was interrupted for user input can resume where it left off.

Let’s dive into the implementation details!

Prerequisites

Before we begin, make sure you have the following:

- Python 3.12+

- Required packages: langchain, langchain-core, langchain-groq, langgraph, langsmith, pydantic, python-dotenv

- API keys for LangSmith (stored as LANGCHAIN_API_KEY) and Groq

Setting Up the Environment

Create a .env file with the following keys:

LANGCHAIN_API_KEY=<your_langchain_api_key>

GROQ_API_KEY=<your_groq_api_key>

LANGCHAIN_TRACING_V2=true

Then, load these variables in your script:

import os

from dotenv import load_dotenv

load_dotenv()

# load_dotenv() has already copied the values from .env into os.environ,
# so only the project name needs to be set here.
os.environ["LANGCHAIN_PROJECT"] = "LangGraph Prompt Generator"
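If a key is missing from the .env file, the problem may only surface later as an authentication error from the provider. A minimal sketch of an early check follows; the helper name missing_env_vars and the choice of which keys count as required are assumptions for illustration, not part of the original tutorial.

```python
import os
from typing import Iterable, List, Mapping


def missing_env_vars(required: Iterable[str], env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the names in `required` that are unset or empty in `env`."""
    return [name for name in required if not env.get(name)]


# Hypothetical usage: call this right after load_dotenv()
missing = missing_env_vars(["GROQ_API_KEY", "LANGCHAIN_API_KEY"])
if missing:
    print(f"Missing environment variables: {', '.join(missing)}")
```

Passing the environment in as a parameter keeps the helper easy to check with a plain dictionary.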

Step 1: Building the Prompt Generator LLMs using LangChain

Setting up the Language Model

We’ll use a Llama 3 model hosted on Groq for this task:

from langchain_groq import ChatGroq

llm = ChatGroq(model="llama-3.3-70b-versatile", temperature=0)

Gathering Information from the User

We start by creating a model to gather necessary details from the user:

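from typing import List

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from pydantic import BaseModel


# Schema of the requirements the LLM must collect before a prompt is written
class PromptInstructions(BaseModel):
    objective: str
    variables: List[str]
    constraints: List[str]
    requirements: List[str]


# Expose the schema as a tool so the model can signal that it has everything
llm_with_tool = llm.bind_tools([PromptInstructions])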

gather_info_prompt = """Your job is to get information from the user about what type of prompt template they want to create.

You must get the following information from them:

- What the objective of the prompt is

- What variables will be passed into the prompt template

- Any constraints for what the output should NOT do

- Any requirements that the output MUST adhere to

Ask user to give all the information. You must get all the above information from the user, if not clear ask the user again.

Do not assume any answer by yourself, get it from the user.

After getting all the information from user, call the relevant tool.

Don't call the tool until you get all the information from user.

If user is not answering your question ask again"""

gather_info_prompt_template = ChatPromptTemplate.from_messages([
    ("system", gather_info_prompt),
    MessagesPlaceholder("messages"),
])

gather_info_llm = gather_info_prompt_template | llm_with_tool

PromptInstructions is a Pydantic model that defines and validates the four fields to collect.

ChatPromptTemplate creates the template for the conversation.

MessagesPlaceholder inserts the conversation history into the prompt on every call.
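To see what the validation means in practice, the sketch below redefines the same model and feeds it a complete and an incomplete set of arguments. It assumes only that pydantic is installed; the sample argument values are made up for illustration.

```python
from typing import List

from pydantic import BaseModel, ValidationError


class PromptInstructions(BaseModel):
    objective: str
    variables: List[str]
    constraints: List[str]
    requirements: List[str]


# A complete set of arguments, shaped like the args of a tool call
complete_args = {
    "objective": "Summarize a support ticket",
    "variables": ["ticket_text"],
    "constraints": ["do not include customer names"],
    "requirements": ["answer in three bullet points"],
}
instructions = PromptInstructions(**complete_args)
print(instructions.variables)

# Leaving out required fields is rejected with a ValidationError
incomplete_args = {"objective": "Summarize a support ticket"}
try:
    PromptInstructions(**incomplete_args)
    validation_failed = False
except ValidationError as exc:
    validation_failed = True
    print(f"Rejected with {len(exc.errors())} validation errors")
```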

Generating the Prompt

Next, we define a model to create the prompt based on the gathered information:

generate_prompt = """Based on the following requirements, write a good prompt template:{reqs}"""

generate_prompt_template = ChatPromptTemplate.from_messages([
    ("system", generate_prompt),
])

generate_prompt_llm = generate_prompt_template | llm

Step 2: State Management

We define the graph’s state to manage messages and interruptions:

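from typing import Annotated, List

from langchain_core.messages import AnyMessage
from langgraph.graph.message import add_messages
from typing_extensions import TypedDict


class State(TypedDict):
    # add_messages merges new messages into the list instead of overwriting it
    messages: Annotated[List[AnyMessage], add_messages]
    # True while the graph is paused waiting for user input
    is_interrupted: bool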
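The Annotated type attaches add_messages as a reducer: when a node returns a value for messages, the reducer merges it into the existing list rather than replacing it. The plain-Python sketch below illustrates the idea only; append_reducer and apply_update are hypothetical names, and the real add_messages also handles message IDs and type coercion.

```python
from typing import Any, Callable, Dict, List


def append_reducer(existing: List[Any], update: Any) -> List[Any]:
    """Merge an update into the existing list, accepting one item or a list."""
    new_items = update if isinstance(update, list) else [update]
    return existing + new_items


def apply_update(
    state: Dict[str, Any],
    update: Dict[str, Any],
    reducers: Dict[str, Callable[[Any, Any], Any]],
) -> Dict[str, Any]:
    """Return a new state: keys with a reducer are merged, others overwritten."""
    merged = dict(state)
    for key, value in update.items():
        if key in reducers:
            merged[key] = reducers[key](merged.get(key, []), value)
        else:
            merged[key] = value
    return merged


state = {"messages": ["user: hi"], "is_interrupted": False}
state = apply_update(
    state,
    {"messages": "ai: what is the objective?", "is_interrupted": True},
    reducers={"messages": append_reducer},
)
print(state)
```

This is why the nodes in this tutorial can assign a single message to the messages key and still end up with the full conversation history.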

Step 3: Creating Nodes

Here are the nodes that make up our graph:

Information Gathering Node:

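from typing import Literal

from langchain_core.messages import ToolMessage
from langgraph.types import Command


def gather_info_node(state: State) -> Command[Literal["tool_node", "human_in_loop_node"]]:
    # Ask the LLM for its next turn: a follow-up question or a tool call
    state["messages"] = gather_info_llm.invoke({"messages": state["messages"]})
    if state["messages"].tool_calls:
        # All requirements collected: hand the tool call to tool_node
        return Command(update=state, goto="tool_node")
    # Otherwise pause for the user's answer
    state["is_interrupted"] = True
    return Command(update=state, goto="human_in_loop_node")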

Checks whether gather_info_llm requested a tool call and uses a Command object to control the graph flow: it either moves to the tool node or routes to the human-in-the-loop node, which interrupts the graph for user input.
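The branching itself depends only on whether the model’s reply carries tool calls, so it can be isolated and checked without calling an LLM. In this sketch, FakeReply is a hypothetical stand-in for the AI message and choose_next_node is an illustrative helper, not part of the tutorial’s code.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class FakeReply:
    """Hypothetical stand-in for an AI message; only tool_calls matters here."""

    content: str = ""
    tool_calls: List[Dict[str, Any]] = field(default_factory=list)


def choose_next_node(reply: FakeReply) -> str:
    """Mirror the routing rule: a tool call means the requirements are complete."""
    return "tool_node" if reply.tool_calls else "human_in_loop_node"


question = FakeReply(content="What is the objective of the prompt?")
finished = FakeReply(tool_calls=[{"name": "PromptInstructions", "args": {}, "id": "call_1"}])
print(choose_next_node(question), choose_next_node(finished))
```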

Tool Node:

def tool_node(state: State):
    # The last message is the AI message carrying the PromptInstructions tool call
    tool_call = state["messages"][-1].tool_calls[0]
    return {
        "messages": [
            ToolMessage(
                content=str(tool_call["args"]),
                tool_call_id=tool_call["id"],
            )
        ]
    }

Processes the tool call made by gather_info_llm and wraps its arguments, which are the gathered requirements, in a ToolMessage.
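Each entry in tool_calls is a dictionary with name, args, and id keys, as the example output later in this article shows. The sketch below uses a hand-written tool call to show what str() produces and, as an optional alternative that is not used in this tutorial, how json.dumps would serialize the same arguments so that they can be parsed back.

```python
import json

# Hand-written example shaped like one entry of AIMessage.tool_calls
tool_call = {
    "name": "PromptInstructions",
    "args": {
        "objective": "Find the largest even number in a list of 10 numbers",
        "variables": ["list of 10 numbers"],
        "constraints": ["only consider even numbers"],
        "requirements": ["output should be a float value"],
    },
    "id": "call_n8ce",
}

# What the tutorial's tool_node stores: the Python repr of the dict
as_repr = str(tool_call["args"])

# Optional alternative: JSON text can be parsed back into a dict later
as_json = json.dumps(tool_call["args"])
round_tripped = json.loads(as_json)

print(as_repr)
print(round_tripped == tool_call["args"])
```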

Prompt Generation Node:

def generate_prompt_node(state: State):
    # The last message is the ToolMessage holding the gathered requirements
    state["messages"] = generate_prompt_llm.invoke({"reqs": state["messages"][-1].content})
    return state

Takes the gathered requirements from tool_node and invokes the generate_prompt_llm to generate the actual prompt.

Human-in-Loop Node:

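from langgraph.types import interrupt


def human_in_loop_node(state: State):
    # Pause the graph; the value passed to Command(resume=...) is returned here.
    # The variable is named user_input to avoid shadowing the built-in input().
    user_input = interrupt("please give the requested data")
    state["is_interrupted"] = False
    state["messages"] = user_input
    return state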

Interrupts graph execution with the interrupt function to request input from the user. When the graph is resumed, the node resets the interrupted flag and adds the user’s input to the messages in the state.
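One detail worth knowing is that, on resume, LangGraph runs the interrupted node again from its first line, and interrupt then returns the resume value instead of pausing. The plain-Python model below imitates that behavior with an exception; PauseForInput, make_interrupt, and run_node are hypothetical names, and the real library additionally persists state through the checkpointer.

```python
from typing import Any, Callable, Dict

_NO_VALUE = object()  # sentinel meaning "no resume value supplied yet"
node_runs = []  # records every time the node body starts


class PauseForInput(Exception):
    """Raised by the fake interrupt when no resume value is available."""


def make_interrupt(resume_value: Any = _NO_VALUE) -> Callable[[str], Any]:
    def fake_interrupt(prompt: str) -> Any:
        if resume_value is _NO_VALUE:
            raise PauseForInput(prompt)
        return resume_value

    return fake_interrupt


def human_node(state: Dict[str, Any], interrupt_fn: Callable[[str], Any]) -> Dict[str, Any]:
    node_runs.append("start")  # runs on the first attempt and again on resume
    user_input = interrupt_fn("please give the requested data")
    return {"messages": state["messages"] + [user_input], "is_interrupted": False}


def run_node(state: Dict[str, Any], resume_value: Any = _NO_VALUE) -> Dict[str, Any]:
    try:
        return human_node(state, make_interrupt(resume_value))
    except PauseForInput as pause:
        # The node did not finish; remember that we are waiting for input
        return {**state, "is_interrupted": True, "pending_prompt": str(pause)}


start_state = {"messages": ["ai: what is the objective?"]}
paused = run_node(start_state)
resumed = run_node(start_state, resume_value="user: sort a list")
print(paused["is_interrupted"], resumed["messages"])
```

Because the node body runs twice, anything placed before the interrupt call should be safe to repeat.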

Step 4: Building the Graph

from langgraph.graph import StateGraph, START, END

from langgraph.checkpoint.memory import MemorySaver

graph_builder = StateGraph(State)

graph_builder.add_node("gather_info_node", gather_info_node)

graph_builder.add_node("generate_prompt_node", generate_prompt_node)

graph_builder.add_node("tool_node", tool_node)

graph_builder.add_node("human_in_loop_node", human_in_loop_node)

graph_builder.add_edge(START, "gather_info_node")

graph_builder.add_edge("human_in_loop_node", "gather_info_node")

graph_builder.add_edge("tool_node", "generate_prompt_node")

graph_builder.add_edge("generate_prompt_node", END)

graph = graph_builder.compile(checkpointer=MemorySaver())

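We create a StateGraph with our State type, add the nodes, define the fixed transitions with edges, and compile the graph with a MemorySaver checkpointer, which interrupt needs in order to save and restore the paused run. The transitions out of gather_info_node are not declared as edges because that node chooses its destination through Command.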
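Because two of the transitions come from Command rather than add_edge, it can help to write the full topology down in one place. The adjacency map below is a hand-made description of this graph, not something LangGraph produces; the reachable helper is an illustrative breadth-first search confirming that END can be reached from START.

```python
from collections import deque
from typing import Dict, List, Set

# Static edges from add_edge plus the two dynamic Command(goto=...) transitions
TRANSITIONS: Dict[str, List[str]] = {
    "START": ["gather_info_node"],
    "gather_info_node": ["tool_node", "human_in_loop_node"],  # chosen via Command
    "human_in_loop_node": ["gather_info_node"],
    "tool_node": ["generate_prompt_node"],
    "generate_prompt_node": ["END"],
    "END": [],
}


def reachable(start: str, transitions: Dict[str, List[str]]) -> Set[str]:
    """Return every node reachable from `start` using breadth-first search."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in transitions.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


print(sorted(reachable("START", TRANSITIONS)))
```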

Step 5: Implementing Graph Interaction

Finally, we create helper functions to interact with our graph:

from langchain_core.messages import HumanMessage


def invoke_graph(message, config, graph):
    # Start a new run with the user's message
    human_message = HumanMessage(content=message)
    response = graph.invoke({"messages": [human_message]}, config=config)
    return response


def resume_graph(message, config, graph):
    # Resume the paused run; the message becomes the return value of interrupt()
    human_message = HumanMessage(content=message)
    response = graph.invoke(Command(resume=human_message), config=config)
    return response


def display(response):
    for message in response["messages"]:
        message.pretty_print()

invoke_graph: Starts a new graph execution.

resume_graph: Continues an interrupted execution by passing Command(resume=...) to graph.invoke.

display: Prints every message in the graph response.

Step 6: Executing the Graph

The graph can now be invoked and resumed as follows:

config = {"configurable": {"thread_id": 1234}}

message = input("Enter input message: ")

# Resume if the previous run on this thread stopped at an interrupt
graph_values = graph.get_state(config).values
if "is_interrupted" in graph_values and graph_values["is_interrupted"]:
    response = resume_graph(message, config, graph)
else:
    response = invoke_graph(message, config, graph)

display(response)

- config: Uses configuration for thread management

- message: Gets user input

- Checks if the graph is interrupted

- Either resumes using resume_graph or starts new execution using invoke_graph

- Displays the response
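On the very first turn the thread has no saved state, so the values dictionary is empty and the is_interrupted key is absent. A small helper makes that case explicit; should_resume is a hypothetical name, and the sample dictionaries stand in for graph.get_state(config).values.

```python
from typing import Any, Mapping


def should_resume(values: Mapping[str, Any]) -> bool:
    """True when the saved state says the graph is paused waiting for input."""
    return bool(values.get("is_interrupted", False))


print(should_resume({}))
print(should_resume({"messages": [], "is_interrupted": True}))
print(should_resume({"messages": [], "is_interrupted": False}))
```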

Complete Code:

import os
from typing import Annotated, List, Literal

from dotenv import load_dotenv
from langchain_core.messages import AnyMessage, HumanMessage, ToolMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_groq import ChatGroq
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import END, START, StateGraph
from langgraph.graph.message import add_messages
from langgraph.types import Command, interrupt
from pydantic import BaseModel
from typing_extensions import TypedDict

# load_dotenv() copies the keys from .env into os.environ
load_dotenv()
os.environ["LANGCHAIN_PROJECT"] = "LangGraph Prompt Generator"


# Setting up the Language Model
def get_llm():
    llm = ChatGroq(model="llama-3.3-70b-versatile", temperature=0)
    return llm


# Gathering Information from the User
def get_gather_info_llm(llm):
    class PromptInstructions(BaseModel):
        objective: str
        variables: List[str]
        constraints: List[str]
        requirements: List[str]

    llm_with_tool = llm.bind_tools([PromptInstructions])

    gather_info_prompt = """Your job is to get information from the user about what type of prompt template they want to create.
You must get the following information from them:
- What the objective of the prompt is
- What variables will be passed into the prompt template
- Any constraints for what the output should NOT do
- Any requirements that the output MUST adhere to
Ask user to give all the information. You must get all the above information from the user, if not clear ask the user again.
Do not assume any answer by yourself, get it from the user.
After getting all the information from user, call the relevant tool.
Don't call the tool until you get all the information from user.
If user is not answering your question ask again"""

    gather_info_prompt_template = ChatPromptTemplate.from_messages([
        ("system", gather_info_prompt),
        MessagesPlaceholder("messages"),
    ])
    gather_info_llm = gather_info_prompt_template | llm_with_tool
    return gather_info_llm


# Generating the Prompt
def get_generate_prompt_llm(llm):
    generate_prompt = """Based on the following requirements, write a good prompt template:{reqs}"""
    generate_prompt_template = ChatPromptTemplate.from_messages([
        ("system", generate_prompt),
    ])
    generate_prompt_llm = generate_prompt_template | llm
    return generate_prompt_llm


def create_graph(gather_info_llm, generate_prompt_llm):
    # State Management
    class State(TypedDict):
        messages: Annotated[List[AnyMessage], add_messages]
        is_interrupted: bool

    # Nodes
    def gather_info_node(state: State) -> Command[Literal["tool_node", "human_in_loop_node"]]:
        state["messages"] = gather_info_llm.invoke({"messages": state["messages"]})
        if state["messages"].tool_calls:
            return Command(update=state, goto="tool_node")
        state["is_interrupted"] = True
        return Command(update=state, goto="human_in_loop_node")

    def tool_node(state: State):
        tool_call = state["messages"][-1].tool_calls[0]
        return {
            "messages": [
                ToolMessage(
                    content=str(tool_call["args"]),
                    tool_call_id=tool_call["id"],
                )
            ]
        }

    def generate_prompt_node(state: State):
        state["messages"] = generate_prompt_llm.invoke({"reqs": state["messages"][-1].content})
        return state

    def human_in_loop_node(state: State):
        # Named user_input to avoid shadowing the built-in input()
        user_input = interrupt("please give the requested data")
        state["is_interrupted"] = False
        state["messages"] = user_input
        return state

    # Building the graph
    graph_builder = StateGraph(State)
    graph_builder.add_node("gather_info_node", gather_info_node)
    graph_builder.add_node("generate_prompt_node", generate_prompt_node)
    graph_builder.add_node("tool_node", tool_node)
    graph_builder.add_node("human_in_loop_node", human_in_loop_node)
    graph_builder.add_edge(START, "gather_info_node")
    graph_builder.add_edge("human_in_loop_node", "gather_info_node")
    graph_builder.add_edge("tool_node", "generate_prompt_node")
    graph_builder.add_edge("generate_prompt_node", END)
    graph = graph_builder.compile(checkpointer=MemorySaver())
    return graph


# Implementing Graph Interaction
def invoke_graph(message, config, graph):
    human_message = HumanMessage(content=message)
    response = graph.invoke({"messages": [human_message]}, config=config)
    return response


def resume_graph(message, config, graph):
    human_message = HumanMessage(content=message)
    response = graph.invoke(Command(resume=human_message), config=config)
    return response


def display(response):
    for message in response["messages"]:
        message.pretty_print()


# Executing the Graph
llm = get_llm()
gather_info_llm = get_gather_info_llm(llm)
generate_prompt_llm = get_generate_prompt_llm(llm)
graph = create_graph(gather_info_llm, generate_prompt_llm)

config = {"configurable": {"thread_id": 1234}}

while True:
    message = input("Enter input message: ")
    graph_values = graph.get_state(config).values
    if "is_interrupted" in graph_values and graph_values["is_interrupted"]:
        response = resume_graph(message, config, graph)
    else:
        response = invoke_graph(message, config, graph)
    display(response)

Example Response

Initial Input:

find largest of 10 numbers

Information gathered by gather_info_node:

{'objective': 'Find the largest even number in a list of 10 numbers', 'variables': ['list of 10 numbers'], 'constraints': ['only consider even numbers'], 'requirements': ['output should be a float value']}

Final Generated Prompt by generate_prompt_node:

Here's a good prompt template based on the given requirements:

"Write a function to find the largest even number in a given list of 10 numbers. The function should only consider even numbers from the list and return the result as a float value. The input list will contain 10 numbers, and the function should handle this list to produce the desired output."

Alternatively, if you want a more formal template:

"Given a list of 10 numbers, develop an algorithm to identify the largest even number within this list, ignoring any odd numbers. Ensure the output is returned as a float value, and provide a clear explanation of your approach."

Example input:

"Find the largest even number in the list [12, 45, 7, 23, 56, 89, 34, 6, 98, 11] and return the result as a float."

Expected output:

"The largest even number in the list is 98.0"

Complete Interaction Output:

================================ Human Message =================================

Hi

================================== Ai Message ==================================

To create a prompt template, I need some information from you. Can you please tell me what the objective of the prompt is? What do you want the prompt to achieve?

================================ Human Message =================================

find largest of 10 numbers

================================== Ai Message ==================================

So the objective of the prompt is to find the largest of 10 numbers.

Next, I need to know what variables will be passed into the prompt template. In this case, I'm assuming the variables are the 10 numbers. Can you confirm that? Are there any other variables that will be passed into the prompt template?

Also, are there any constraints for what the output should NOT do? For example, should the prompt avoid using certain words or phrases?

Lastly, are there any requirements that the output MUST adhere to? For example, should the output be in a specific format or should it provide explanations for the answer?

================================ Human Message =================================

it will be a list of 10 numbers

================================== Ai Message ==================================

So, to confirm:

* The objective of the prompt is to find the largest of 10 numbers.

* The variable that will be passed into the prompt template is a list of 10 numbers.

Next, I need to know about any constraints for what the output should NOT do. For example, should the prompt avoid using certain words or phrases, or should it avoid providing multiple answers?

Also, are there any requirements that the output MUST adhere to? For example, should the output be in a specific format, such as "The largest number is X", or should it provide explanations for why a particular number is the largest?

Please let me know about any constraints or requirements you have in mind.

(And if you're not sure, that's okay! I can ask follow-up questions to help clarify things.)

================================ Human Message =================================

only find even numbers

================================== Ai Message ==================================

So, to confirm:

* The objective of the prompt is to find the largest of 10 numbers, but only considering the even numbers in the list.

* The variable that will be passed into the prompt template is a list of 10 numbers.

As for constraints, I understand that the prompt should:

* Only consider even numbers when finding the largest number

Is that correct?

As for requirements, are there any specific requirements for the output? For example, should the output be in a specific format, such as "The largest even number is X", or should it provide explanations for why a particular number is the largest even number?

Please let me know if there's anything else I should know.

(And if you're ready, I can call the PromptInstructions function with the information we've gathered so far!)

================================ Human Message =================================

output should be float

================================== Ai Message ==================================

Tool Calls:

PromptInstructions (call_n8ce)

Call ID: call_n8ce

Args:

objective: Find the largest even number in a list of 10 numbers

variables: ['list of 10 numbers']

constraints: ['only consider even numbers']

requirements: ['output should be a float value']

================================= Tool Message =================================

{'objective': 'Find the largest even number in a list of 10 numbers', 'variables': ['list of 10 numbers'], 'constraints': ['only consider even numbers'], 'requirements': ['output should be a float value']}

================================== Ai Message ==================================

Here's a good prompt template based on the given requirements:

"Write a function to find the largest even number in a given list of 10 numbers. The function should only consider even numbers from the list and return the result as a float value. The input list will contain 10 numbers, and the function should handle this list to produce the desired output."

Alternatively, if you want a more formal template:

"Given a list of 10 numbers, develop an algorithm to identify the largest even number within this list, ignoring any odd numbers. Ensure the output is returned as a float value, and provide a clear explanation of your approach."

Example input:

"Find the largest even number in the list [12, 45, 7, 23, 56, 89, 34, 6, 98, 11] and return the result as a float."

Expected output:

"The largest even number in the list is 98.0"

Conclusion

By combining LangChain, LangGraph, and Groq, we’ve built a customizable prompt generation workflow. The human-in-the-loop step, implemented with interrupt and Command, lets the user supply and refine the requirements before the prompt is generated.