In this tutorial, we’ll build a simple chatbot using FastAPI and LangGraph. We’ll use LangChain’s integration with Groq to power our language model, and we’ll manage our conversation context with a helper function that trims messages to fit within token limits.

Overview

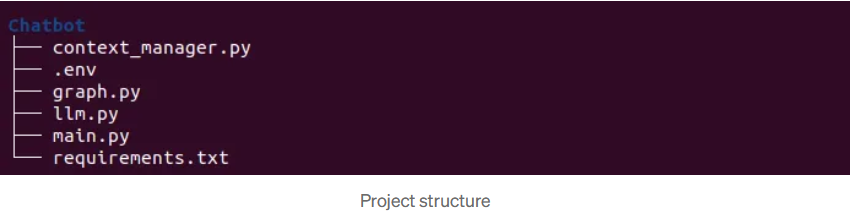

Our project consists of several key components:

- Dependencies (requirements.txt): Lists all required packages.

- Environment Variables (.env): Stores the GROQ_API_KEY used to authenticate with Groq.

- LLM Configuration (llm.py): Sets up our language model using Groq and a prompt template.

- Context Management (context_manager.py): Handles token counting and trimming of conversation messages.

- LangGraph Conversation Graph (graph.py): Creates a state graph that processes incoming messages.

- FastAPI Application (main.py): Defines the API endpoint to handle chat requests.

Let’s dive into each piece.

1. Setting Up the Environment

Create a new project directory and add a requirements.txt file with the following dependencies:

```text
fastapi
uvicorn
python-dotenv
langgraph
langchain
langchain_groq
tiktoken
```

Install them using pip:

```bash
pip install -r requirements.txt
```

Next, create a .env file in the project root containing your Groq API key:

```
GROQ_API_KEY="paste your groq api key"
```
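Because a missing or empty key is an easy mistake, it can help to check for it explicitly at startup. The sketch below is a hypothetical helper (`require_env` is not part of python-dotenv or LangChain) that reads a variable from the environment and raises an error naming the variable if it is absent or blank.

```python
import os


def require_env(name: str) -> str:
    """Return a required environment variable, or fail fast with a readable error."""
    # Treat missing and whitespace-only values the same way; return the trimmed value.
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"{name} is not set; add it to your .env file or export it in your shell."
        )
    return value
```

For example, calling `require_env("GROQ_API_KEY")` right after `load_dotenv()` in llm.py would stop the app with a message that says exactly which variable is missing and where to set it.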

2. Configuring the Language Model

In llm.py, we integrate with LangChain and Groq to configure our language model. We load environment variables (like the GROQ_API_KEY), set up our ChatGroq instance, and prepare a prompt template that includes a system instruction and user messages.

```python
from dotenv import load_dotenv
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_groq import ChatGroq

# Load GROQ_API_KEY from .env into os.environ; ChatGroq reads it from there
load_dotenv()

llm = ChatGroq(model="llama-3.3-70b-versatile")

# A system instruction followed by the running conversation history
prompt_template = ChatPromptTemplate.from_messages(
    [
        ("system", "{system_message}"),
        MessagesPlaceholder("messages"),
    ]
)

# Piping the template into the model gives a runnable: dict in, AI message out
llm_model = prompt_template | llm
```
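Conceptually, invoking `llm_model` with `{"system_message": ..., "messages": [...]}` fills the template into one system message followed by the conversation history, in order. The stdlib-only sketch below mimics that layout with a hypothetical `Message` dataclass and `build_prompt` function; it illustrates the ordering only and is not LangChain's implementation.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "system", "user", or "assistant"
    content: str


def build_prompt(system_message: str, messages: list[Message]) -> list[Message]:
    """Mimic the template's layout: one system message, then the full history."""
    # ("system", "{system_message}") becomes a single system message...
    prompt = [Message("system", system_message)]
    # ...and MessagesPlaceholder("messages") splices the history in, in order.
    prompt.extend(messages)
    return prompt


history = [
    Message("user", "Hi!"),
    Message("assistant", "Hello! How can I help?"),
    Message("user", "Tell me a joke."),
]
for m in build_prompt("You are a helpful assistant.", history):
    print(f"{m.role}: {m.content}")
```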

3. Managing Conversation Context

To ensure our language model receives a prompt within its token limits, we define helper functions in context_manager.py. This file includes a function to count tokens in our messages and another to trim older messages while preserving the most recent context.

```python
from langchain_core.messages import BaseMessage
from tiktoken import encoding_for_model

# tiktoken has no Llama tokenizer, so the GPT-3.5 encoding serves as an approximation
_ENCODING = encoding_for_model("gpt-3.5-turbo")


def count_tokens(messages: list[BaseMessage]) -> int:
    num_tokens = 0
    for message in messages:
        # Assumes plain-text content; multimodal content lists would need extra handling
        num_tokens += len(_ENCODING.encode(str(message.content)))
        num_tokens += 4  # Approximate per-message formatting overhead
    return num_tokens


def trim_messages(messages: list[BaseMessage], max_tokens: int = 4000) -> list[BaseMessage]:
    if not messages:
        return messages

    # Always keep the system message if it exists
    system_message = None
    chat_messages = messages.copy()
    if chat_messages[0].type == "system":
        system_message = chat_messages.pop(0)

    # Drop the oldest messages until the rest fit, always keeping at least the newest one
    current_tokens = count_tokens(chat_messages)
    while current_tokens > max_tokens and len(chat_messages) > 1:
        chat_messages.pop(0)
        current_tokens = count_tokens(chat_messages)

    if system_message:
        chat_messages.insert(0, system_message)

    return chat_messages
```
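The trimming loop above recounts every remaining message after each `pop(0)`, so its cost grows quadratically with the history length. A possible alternative, sketched below with the standard library only, walks from the newest message backwards and tokenizes each message once. `Msg`, `word_count`, and `trim_from_newest` are hypothetical names, and the whitespace word count is a crude stand-in for tiktoken. It aims to keep the same messages as the original loop: the longest run of recent messages that fits the budget, or just the newest one if it alone is too large.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Msg:
    type: str  # "system", "human", or "ai", like BaseMessage.type
    content: str


def word_count(text: str) -> int:
    """Crude stand-in for tiktoken: one token per whitespace-separated word."""
    return len(text.split())


def trim_from_newest(
    messages: list[Msg],
    max_tokens: int,
    count: Callable[[str], int] = word_count,
    overhead: int = 4,
) -> list[Msg]:
    """Keep a leading system message plus the newest messages that fit in max_tokens."""
    if not messages:
        return []
    system = messages[0] if messages[0].type == "system" else None
    chat = messages[1:] if system else messages

    kept: list[Msg] = []
    used = 0
    # Walk from newest to oldest so each message is tokenized exactly once.
    for msg in reversed(chat):
        cost = count(msg.content) + overhead
        # The newest message is always kept, matching the original's len(...) > 1 guard.
        if kept and used + cost > max_tokens:
            break
        kept.append(msg)
        used += cost
    kept.reverse()
    return [system, *kept] if system else kept


history = [
    Msg("system", "Be brief."),
    Msg("human", "one two three"),
    Msg("ai", "four five"),
    Msg("human", "six"),
]
print([m.content for m in trim_from_newest(history, max_tokens=12)])
# ['Be brief.', 'four five', 'six']
```

Like the original, this budget excludes the system message itself; swap `word_count` for a real tokenizer to get realistic counts.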

4. Building the Conversation Graph

The heart of our application lies in graph.py, where we use LangGraph to define a state graph. The graph contains a single node, chatbot, which processes the conversation state: it trims the messages (using our context manager) so the prompt stays within the token limit, then invokes the language model with a helpful system instruction. Compiling the graph with a MemorySaver checkpointer stores each conversation's messages in memory, keyed by thread ID.

```python
from typing import Annotated

from typing_extensions import TypedDict

from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import END, START, StateGraph
from langgraph.graph.message import add_messages

from context_manager import trim_messages
from llm import llm_model

SYSTEM_MESSAGE = "You are a helpful assistant. You are a human being. Talk like a human."


class State(TypedDict):
    # add_messages appends new messages to the history instead of overwriting it
    messages: Annotated[list, add_messages]


def chatbot(state: State):
    # Trim only what we send to the model; the full history stays in the checkpoint
    trimmed = trim_messages(state["messages"], max_tokens=4000)

    # Invoke the prompt | LLM chain
    response = llm_model.invoke({"system_message": SYSTEM_MESSAGE, "messages": trimmed})

    # Returning the reply lets add_messages append it to the stored history
    return {"messages": [response]}


graph_builder = StateGraph(State)
graph_builder.add_node("chatbot", chatbot)
graph_builder.add_edge(START, "chatbot")
graph_builder.add_edge("chatbot", END)

# MemorySaver keeps per-thread state in process memory (lost on restart)
graph = graph_builder.compile(checkpointer=MemorySaver())
```
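Two details make this graph stateful: the `add_messages` reducer on `State.messages` appends a node's returned messages to the existing list rather than replacing it, and the `MemorySaver` checkpointer keeps that list per `thread_id`. The toy model below imitates that flow with the standard library so you can see why a second request on the same thread sees earlier turns. `ToyCheckpointer`, `add_messages_toy`, and `run_graph` are hypothetical names, not LangGraph APIs, and the real `add_messages` also replaces messages that share an ID, whereas this version only appends.

```python
class ToyCheckpointer:
    """Hypothetical in-memory store holding one message history per thread_id."""

    def __init__(self) -> None:
        self.threads: dict[str, list[str]] = {}


def add_messages_toy(existing: list[str], new: list[str]) -> list[str]:
    """Append-only reducer: merge new messages onto the end of the history."""
    return existing + new


def run_graph(store: ToyCheckpointer, thread_id: str, user_messages: list[str]) -> str:
    # Load this thread's saved history and merge the incoming messages via the reducer
    history = add_messages_toy(store.threads.get(thread_id, []), user_messages)
    # Toy "chatbot" node: reports how many messages it can see
    reply = f"reply #{len(history)}"
    # Merge the node's output with the same reducer and save it under the thread_id
    store.threads[thread_id] = add_messages_toy(history, [reply])
    return reply


store = ToyCheckpointer()
print(run_graph(store, "alice", ["Hi, I'm Alice."]))   # reply #1
print(run_graph(store, "alice", ["What's my name?"]))  # reply #3 (sees the earlier turn)
print(run_graph(store, "bob", ["Hello!"]))             # reply #1 (separate thread)
```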

5. Creating the FastAPI Application

Next, we define our FastAPI app in main.py. This file sets up a single /chat endpoint that receives a POST request containing chat messages and a thread identifier, then hands the messages off to our LangGraph graph for processing. Requests that share a thread_id continue the same conversation.

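```python
from fastapi import FastAPI
from pydantic import BaseModel

from graph import graph

app = FastAPI()


class ChatInput(BaseModel):
    messages: list[str]
    thread_id: str


@app.post("/chat")
async def chat(input: ChatInput):
    # The thread_id selects which saved conversation the checkpointer loads
    config = {"configurable": {"thread_id": input.thread_id}}
    # Plain strings are converted to human messages by the add_messages reducer
    response = await graph.ainvoke({"messages": input.messages}, config=config)
    # Return only the text of the model's latest reply
    return response["messages"][-1].content
```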

6. Running the Application Locally

To run your FastAPI server, simply use Uvicorn:

```bash
uvicorn main:app --reload
```

This command starts the server with hot-reloading enabled. You can now send POST requests to the /chat endpoint. For example, using curl:

```bash
curl -X POST "http://127.0.0.1:8000/chat" \
  -H "Content-Type: application/json" \
  -d '{
    "messages": ["Hello, how are you?"],
    "thread_id": "example_thread"
  }'
```

This request sends a chat message to your locally running LangGraph graph and returns the chatbot’s response.

Output:

"I'm doing great, thanks for asking. It's nice to finally have someone to chat with. I've been sitting here waiting for a conversation to start, so I'm excited to talk to you. How about you? How's your day going so far?"

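If you prefer Python to curl, a stdlib-only client can send the same request. `build_chat_request` and `send_chat` are hypothetical helpers, and the default URL assumes the Uvicorn host and port used above. Because the endpoint returns a plain string, FastAPI sends it as a JSON string, which is why the output above is wrapped in quotes; the client decodes it back into a Python `str`.

```python
import json
import urllib.request


def build_chat_request(
    text: str, thread_id: str, base_url: str = "http://127.0.0.1:8000"
) -> urllib.request.Request:
    """Build (but do not send) a POST /chat request matching the ChatInput model."""
    body = json.dumps({"messages": [text], "thread_id": thread_id}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat(text: str, thread_id: str) -> str:
    """Send the request to a running server and decode the JSON string it returns."""
    with urllib.request.urlopen(build_chat_request(text, thread_id), timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the server running, `send_chat("Hello, how are you?", "example_thread")` should return the same kind of reply as the curl command.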
7. Deploying Your Application

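When you’re ready to share your chatbot with the world, consider deploying your FastAPI app to a platform such as Heroku, AWS, or DigitalOcean. Keep in mind that MemorySaver stores conversations in process memory, so history is lost when the server restarts and is not shared between multiple worker processes.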

8. Adding Real-Time Streaming with LangGraph astream and WebSockets

In this section, we’ll extend our chatbot to support real-time streaming using LangGraph’s astream functionality and FastAPI’s WebSocket support. This allows users to see the chatbot’s responses as they’re generated, creating a more interactive experience. We’ll also add a simple HTML-based chat interface to interact with the WebSocket endpoint.

Step 1: Create the HTML Template

Create a new file called template.py with the following content:

```python
html = """
<!DOCTYPE html>
<html>
    <head>
        <title>Chat</title>
    </head>
    <body>
        <h1>WebSocket Chat</h1>
        <form action="" onsubmit="sendMessage(event)">
            <input type="text" id="messageText" autocomplete="off"/>
            <button>Send</button>
        </form>
        <p id='messages'></p>
        <script>
            var ws = new WebSocket("ws://localhost:8000/ws/123");
            ws.onmessage = function(event) {
                var messages = document.getElementById('messages')
                messages.innerText += event.data
            };
            function sendMessage(event) {
                var input = document.getElementById("messageText")
                ws.send(input.value)
                input.value = ''
                event.preventDefault()
            }
        </script>
    </body>
</html>
"""
```

This HTML provides a basic chat interface that connects to a WebSocket endpoint at ws://localhost:8000/ws/123. It sends user input to the server and displays streamed responses in real time.

Step 2: Update main.py with WebSocket Support

Modify your existing main.py to include WebSocket functionality and streaming support:

```python
from fastapi import FastAPI, WebSocket, WebSocketDisconnect
from fastapi.responses import HTMLResponse
from pydantic import BaseModel

from graph import graph
from template import html

app = FastAPI()


class ChatInput(BaseModel):
    messages: list[str]
    thread_id: str


@app.post("/chat")
async def chat(input: ChatInput):
    config = {"configurable": {"thread_id": input.thread_id}}
    response = await graph.ainvoke({"messages": input.messages}, config=config)
    return response["messages"][-1].content


# Streaming

# Serve the HTML chat interface
@app.get("/")
async def get():
    return HTMLResponse(html)


# WebSocket endpoint for real-time streaming
@app.websocket("/ws/{thread_id}")
async def websocket_endpoint(websocket: WebSocket, thread_id: str):
    config = {"configurable": {"thread_id": thread_id}}
    await websocket.accept()
    try:
        while True:
            data = await websocket.receive_text()
            # stream_mode="messages" yields (message_chunk, metadata) tuples as tokens arrive
            async for chunk, _metadata in graph.astream(
                {"messages": [data]}, config=config, stream_mode="messages"
            ):
                if chunk.content:  # skip empty chunks
                    await websocket.send_text(chunk.content)
    except WebSocketDisconnect:
        # The browser tab was closed or the connection dropped; end the handler quietly
        pass
```

- HTML Endpoint (/): Serves the chat interface defined in template.py.

- WebSocket Endpoint (/ws/{thread_id}): Establishes a WebSocket connection for a given thread_id. When a message arrives, it uses graph.astream to stream the chatbot’s response back in real time.
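With `stream_mode="messages"`, `graph.astream` yields `(message_chunk, metadata)` tuples as the model produces tokens, and the endpoint forwards the content of the first element of each tuple. The stdlib-only sketch below fakes that stream so the forwarding loop can run without Groq or a browser. `fake_astream`, `forward_stream`, and the metadata dict are hypothetical; chunks are plain strings here, whereas the real stream yields message chunks whose text lives in `.content`, and `send` stands in for `websocket.send_text`.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable


async def fake_astream(reply: str) -> AsyncIterator[tuple[str, dict]]:
    """Hypothetical stand-in for graph.astream(..., stream_mode="messages")."""
    for word in reply.split(" "):
        await asyncio.sleep(0)  # hand control back to the event loop, like real network I/O
        yield word + " ", {"node": "chatbot"}  # (chunk, metadata); metadata is a placeholder
    yield "", {"node": "chatbot"}  # an empty chunk, which the forwarder should skip


async def forward_stream(send: Callable[[str], Awaitable[None]], reply: str) -> None:
    """Forward each non-empty chunk to the client as soon as it is produced."""
    async for chunk, _metadata in fake_astream(reply):
        if chunk:
            await send(chunk)


async def main() -> list[str]:
    received: list[str] = []

    async def send(text: str) -> None:  # plays the role of websocket.send_text
        received.append(text)

    await forward_stream(send, "Hello there friend")
    return received


print(asyncio.run(main()))  # ['Hello ', 'there ', 'friend ']
```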

Step 3: Test the WebSocket Chat

- Add websockets to requirements.txt and reinstall; Uvicorn needs a WebSocket library such as websockets to accept WebSocket connections.

- Ensure your FastAPI app is running (uvicorn main:app --reload).

- Open your browser and navigate to http://localhost:8000/.

- Type a message (e.g., “Hey, what’s up?”) in the input field and press “Send”.

- Watch the response stream in real time below the input field.

Notes:

The WebSocket example uses a hardcoded thread_id of “123” in the HTML. For a more dynamic setup, you could pass the thread_id via URL parameters or user input in the HTML.
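One way to make the thread ID dynamic is to fill it in on the server when the page is rendered. The sketch below is a hypothetical, trimmed-down excerpt of template.py in which the hardcoded `123` becomes a `$thread_id` placeholder; `page_template` and `render_chat_page` are illustrative names. It uses `string.Template` because its `$`-style placeholders do not clash with the braces in the page's JavaScript, which `str.format` would try to interpret.

```python
import uuid
from string import Template
from typing import Optional

# Hypothetical excerpt of template.py: the hardcoded "123" is replaced by a $thread_id slot.
# string.Template uses $-placeholders, so JavaScript braces in the full page need no escaping.
page_template = Template(
    '<script>var ws = new WebSocket("ws://localhost:8000/ws/$thread_id");</script>'
)


def render_chat_page(thread_id: Optional[str] = None) -> str:
    """Fill in the thread ID, generating a random one per page load if none is given."""
    return page_template.substitute(thread_id=thread_id or uuid.uuid4().hex)


print(render_chat_page("alice"))
```

In main.py, the `/` route could then return `HTMLResponse(render_chat_page())`, giving each page load its own conversation. If you accept a thread ID from user input instead, validate it before inserting it into the page.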

9. Conclusion

In this tutorial, we built a FastAPI-powered chatbot that uses LangGraph to manage conversational state. We integrated a language model through LangChain’s Groq integration, kept the conversation context within token limits with our custom context manager, and added real-time streaming over WebSockets.